I’ve gotten a lot of comments about the moderation system that I designed for Kwiks, so I thought I’d take the time to discuss how it worked and what I built. First, a little background - I was freelancing on Upwork when I met someone working at Kwiks who was recruiting a team to develop a new social media app similar to TikTok. I liked the vibe and I was looking for something a little more stable, so I decided to give it a try. I was the first backend developer on staff, and my infrastructure experience put me in charge of both the backend and the infrastructure right from the beginning of development. During my year at Kwiks I built out their backend and a complex, scalable infrastructure designed to reach millions of users across the globe.

The Problem

As Kwiks scaled, we ran into some growing pains, and one of them was content moderation. We had a system in place that allowed administrators to remove content manually, but manual review is not ideal when tens of thousands of videos are uploaded every day, because a lot of inappropriate content slips through the cracks. We weren’t being super heavy-handed with content moderation and were mostly okay with uploads that were legal and at least somewhat appropriate, but we were still barraged with harmful content that we didn’t want our users to view or our systems to host, so we needed something better.

As the de facto backend and infrastructure guy, the responsibility fell on me to advise on how to proceed. At first I advised that we hire someone who could develop an AI/ML product to filter content as it was uploaded. We interviewed multiple people, but their timelines and asking prices did not align with business goals, so once again the responsibility fell on me to figure out a way to do automated content moderation as we continued to scale up. At the time I was pretty new to the AI/ML tools available on the market - my experience was pretty much messing around with ChatGPT and using Copilot in my editor - so I had to do a bit of research.

The Solution

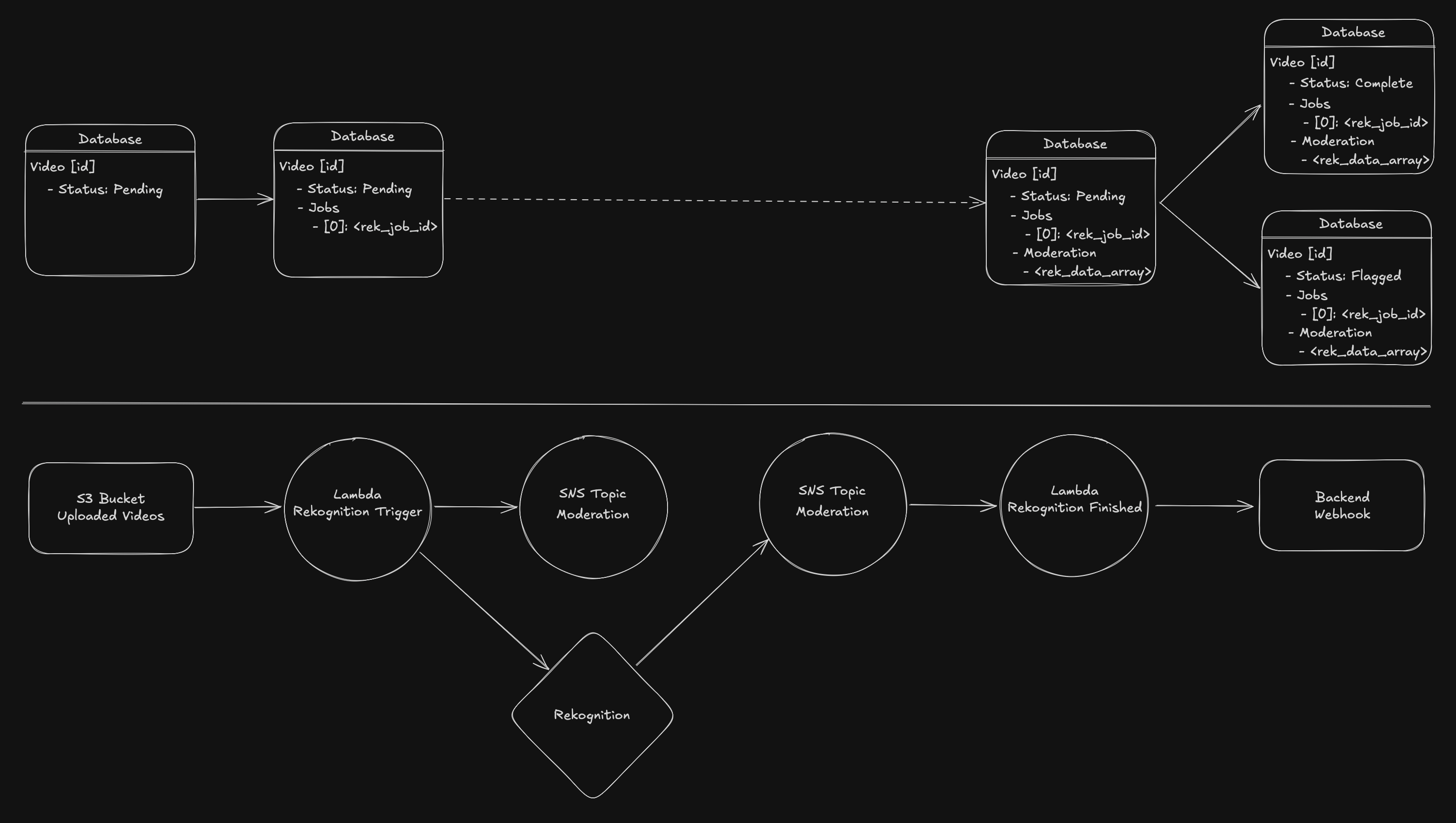

After researching available tools I stumbled on AWS Rekognition. While there wasn’t a lot of good documentation for it at the time, it seemed like the perfect solution: we were already using many AWS services and our videos were hosted in AWS S3 buckets, which made integration seemingly simple.

The first step was setting up an IAM role for Rekognition to access the data within the S3 buckets so that it could use the videos inside. This took a lot of fiddling to get right because of the lack of documentation - it was a lot of guesswork! I’m sure it’s easier now. The second step was setting up an SNS topic so that we could be notified when a Rekognition job completed. Rekognition runs asynchronously and doesn’t have a determinate completion time, though it’s usually not very long, which is helpful because we need to hold uploaded videos until content moderation has completed.
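The exact policies we ended up with aren't reproduced here, but the two steps above can be sketched roughly as follows. This is only an illustration under assumptions: the function name, bucket name, and topic ARN are hypothetical, and the permissions shown (reading objects from the bucket, publishing to the topic) are a guess at a reasonable minimum rather than a record of what ran in production.

```python
import json


def build_rekognition_role_policies(bucket_name: str, topic_arn: str) -> tuple[dict, dict]:
    """Return (trust_policy, permissions_policy) for a role Rekognition can assume.

    Hypothetical helper: names and scope are assumptions for illustration.
    """
    # Trust policy: lets the Rekognition service assume the role.
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "rekognition.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    # Permissions policy: read the uploaded videos and publish the
    # "job finished" notification to the SNS topic.
    permissions_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                "Effect": "Allow",
                "Action": "sns:Publish",
                "Resource": topic_arn,
            },
        ],
    }
    return trust_policy, permissions_policy


if __name__ == "__main__":
    # Placeholder bucket and topic, not real resources.
    trust, permissions = build_rekognition_role_policies(
        "example-video-bucket",
        "arn:aws:sns:us-east-1:123456789012:example-moderation-topic",
    )
    print(json.dumps(trust, indent=2))
    print(json.dumps(permissions, indent=2))
```

The printed JSON documents are the kind of thing you would attach to the role in the IAM console or through your infrastructure tooling of choice.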

As you can see in the top section of the graphic above, the video object that tracks the file as it moves through the system has a status field that starts out as pending when the video is uploaded. There are more statuses for the uploading process too, but we use this database object to tell the uploader what’s happening with their video. When the frontend reads the pending status, we let the user know that the video is moving through our systems and should be available soon. Once the video has finished its initial upload and this status is set, our Lambda function is triggered automatically with information about the file, such as its name and key, which we pass to Rekognition to kick off content moderation detection. We also pass along the SNS topic so that Rekognition can signal when it’s done, and we store the JobId of the Rekognition job for good measure in case we need to look at what happened to a particular video.
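To make the kickoff step concrete, here is a minimal sketch of what such a Lambda could look like in Python with boto3. It is not the original function: the environment variable names `MODERATION_TOPIC_ARN` and `REKOGNITION_ROLE_ARN`, the helper `build_moderation_request`, and the optional `client` parameter (there only so the function can be exercised without AWS) are all assumptions. The Rekognition call itself, `start_content_moderation` with `Video` and `NotificationChannel`, is the real boto3 API.

```python
import os
from urllib.parse import unquote_plus


def build_moderation_request(record: dict, topic_arn: str, role_arn: str) -> dict:
    """Turn one S3 event record into keyword arguments for start_content_moderation."""
    s3 = record["s3"]
    return {
        "Video": {
            "S3Object": {
                "Bucket": s3["bucket"]["name"],
                # Object keys arrive URL-encoded in S3 event notifications.
                "Name": unquote_plus(s3["object"]["key"]),
            }
        },
        # Rekognition publishes to this topic when the job finishes.
        "NotificationChannel": {"SNSTopicArn": topic_arn, "RoleArn": role_arn},
    }


def handler(event, context, client=None):
    """Start one moderation job per uploaded video and return the job IDs."""
    if client is None:
        import boto3  # imported lazily so the module loads without boto3 installed

        client = boto3.client("rekognition")

    # Hypothetical environment variable names.
    topic_arn = os.environ["MODERATION_TOPIC_ARN"]
    role_arn = os.environ["REKOGNITION_ROLE_ARN"]

    job_ids = []
    for record in event["Records"]:
        request = build_moderation_request(record, topic_arn, role_arn)
        response = client.start_content_moderation(**request)
        # The JobId is what gets saved on the video object (persistence omitted here).
        job_ids.append(response["JobId"])
    return job_ids
```

Keeping the request-building logic in its own function makes it easy to test without touching AWS, which matters when the documentation is thin and you are iterating by trial and error.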

When Rekognition completes, the SNS topic triggers the “Rekognition Finished” Lambda, which requests the moderation data that Rekognition generated from the video. We simplify that data because, in our case, we didn’t care about where the occurrences were or anything like that; we just needed to know whether the video included inappropriate content. Basically, we boil it down to a list of strings, then save it to the video object in the database.
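A rough sketch of that condensing step, assuming Python and boto3, might look like the following. The function names are hypothetical; the response fields (`ModerationLabels`, `ModerationLabel`, `Name`, `NextToken`) follow the shape returned by Rekognition's `get_content_moderation`, and the job ID is read from the JSON message that Rekognition publishes to SNS.

```python
import json


def job_id_from_sns_event(event: dict) -> str:
    """Pull the Rekognition JobId out of the SNS message that triggered the Lambda."""
    message = json.loads(event["Records"][0]["Sns"]["Message"])
    return message["JobId"]


def fetch_pages(client, job_id: str):
    """Yield every page of moderation results for a finished job."""
    token = None
    while True:
        kwargs = {"JobId": job_id}
        if token:
            kwargs["NextToken"] = token
        page = client.get_content_moderation(**kwargs)
        yield page
        token = page.get("NextToken")
        if not token:
            break


def condense_labels(pages) -> list[str]:
    """Reduce timestamped detections to a sorted list of unique label names."""
    names = set()
    for page in pages:
        for detection in page.get("ModerationLabels", []):
            # Timestamps and confidence are dropped; only the label name is kept.
            names.add(detection["ModerationLabel"]["Name"])
    return sorted(names)
```

With a real client this would be called as `condense_labels(fetch_pages(boto3.client("rekognition"), job_id))`, and the resulting list is what gets stored on the video object.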

Once all of the data has been collected and condensed, the Lambda calls a webhook in the backend so that the backend can do further processing on the video and notify the user about their video’s status. Basically, we have a list of tags that are not allowed on the platform, and if any of the tags that Rekognition found are in that list, we inform the user that their content has been removed for violating our content guidelines and we mark the video as flagged so that we can look into it further in the future and take action if needed. If the video was approved, we also let the user know that their video is available so that they know when their content went live.
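The backend check itself is simple enough to sketch in a few lines. This is an illustrative Python version, not the production code: the function name, the `"approved"` status string, and the case-insensitive comparison are assumptions, while `"flagged"` matches the status described above.

```python
def review_video(found_labels, blocked_labels):
    """Return (status, violations) for a video given the labels Rekognition found."""
    # Compare case-insensitively so "violence" in the blocklist matches "Violence".
    blocked = {label.casefold() for label in blocked_labels}
    violations = sorted(label for label in found_labels if label.casefold() in blocked)
    status = "flagged" if violations else "approved"
    return status, violations


if __name__ == "__main__":
    status, violations = review_video(["Alcohol", "Violence"], {"violence"})
    print(status, violations)
```

Returning the matching labels alongside the status keeps a record of why a video was flagged, which is useful when someone reviews it later.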

The Outcome

Our leadership team had some doubts about the effectiveness of this solution, so we did extensive testing to ensure that the process would work and work well. During my manual testing I realized just how good AWS Rekognition was - it was not only very accurate but also very fast. Instead of explaining this to our leadership team, I invited them to test it live during a demo, and they were blown away by how well everything worked. We ran this tool in production for several months as the platform expanded, and everything was smooth and in great shape up until the end.

I think this is a good example of using a tool vs. building one - sometimes it’s more efficient to build a tool and sometimes it’s more efficient to use an existing tool. When the time and money it takes to build the tool far exceed the cost of using an existing one, you can put off building something until the trade-off evens out. If we had expanded Kwiks to the level of TikTok, AWS Rekognition would no longer have been cost-effective, but we would have had the resources to hire for and wait on a more custom-tailored solution. At the time, as a young, scrappy startup, this solution ended up being ideal for our use case.